X++ hasn’t changed much since I started working with it eleven years ago, with a few exceptions such as event handlers in AX 2012.

That’s not true anymore in AX 7, because it comes with many new language constructs (and more are expected). If you know modern object-oriented languages, especially C#, you’ll find them very familiar, but some concepts will be completely new for many AX developers.

In general, X++ now utilizes many features supported by CIL, and the syntax of the new features closely follows C#, which is helpful for everyone who knows or is going to learn C#. To use the language correctly, you also have to be aware of some details of the runtime environment (CLR), such as how it handles garbage collection. You’ll find much more information written for C# developers than for X++ developers, so consider learning about the common features from sources originally intended for C#.

Some new features help with implementation hiding and with splitting the application into logical packages, something that is really missing in AX these days (and AX7 isn’t the solution either, it’s just one more step in the right direction).

I’m not going to talk about every new feature, because you can already find that on the AX7 wiki (Using X++ and debugger features) and on mfp’s blog (What is new in X++ in AX7?). Let me just mention a few things that I consider especially important or tricky.

Private member variables may not look very exciting, but they solve an important problem. Having all member variables protected (as in previous AX versions) means that any child class can access and change any variable defined in its parents. Classes often have member variables that they need for their own internal logic and that shouldn’t be exposed to anybody else.

Making variables private protects them from unexpected changes and it also makes things easier for developers extending the class. For example, if a parent class has eight private fields and two protected ones, developers adding child classes have to care about just two variables and not all ten. It’s obvious that the private ones aren’t intended for them.
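To make the difference concrete, here is a minimal sketch. The classes Vehicle and Truck and all their members are made up for illustration; they don’t exist in the standard application.

```xpp
class Vehicle
{
    // Internal state: only Vehicle's own methods can touch it
    private int mileage;

    // Intended for child classes to read and change
    protected str registrationNumber;

    public void drive(int _distance)
    {
        mileage += _distance;
    }

    public int getMileage()
    {
        return mileage;
    }
}

class Truck extends Vehicle
{
    public void register(str _number)
    {
        registrationNumber = _number;   // fine, the field is protected

        // mileage = 0;                 // wouldn't compile, the field is private to Vehicle
    }
}
```

A developer writing Truck sees only registrationNumber as something to work with; mileage stays an implementation detail of Vehicle.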

Const represents values that never change. It’s a simple concept, but it’s nice to have it in X++, instead of using variables (which don’t prevent changes) and macros (which don’t have a type and are really ugly).

Readonly member variables are more interesting, because you can set their value when creating an object and they can’t change afterwards. It’s a common scenario but there was no way to enforce it in previous versions. It also allows you to create immutable objects.
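Here is a small sketch of both keywords together, assuming a hypothetical ProductCode class (the class, the constant and the length limit are my own invention). The object is immutable: once the constructor finishes, the code can’t change anymore.

```xpp
class ProductCode
{
    // Typed constant, referenced as ProductCode::MaxLength
    public const int MaxLength = 10;

    // Can be assigned only in the declaration or in the constructor
    private readonly str code;

    public void new(str _code)
    {
        if (strLen(_code) > ProductCode::MaxLength)
        {
            throw error(strFmt("Product code can't be longer than %1 characters.", ProductCode::MaxLength));
        }

        code = _code;
    }

    public str getCode()
    {
        return code;
    }

    public void clear()
    {
        // code = '';   // wouldn't compile, the field is readonly
    }
}
```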

Static fields (member variables) share certain values across all instances of a class. It’s useful in some cases, but it’s also very dangerous for several reasons. Please try to avoid them, especially if you don’t understand what these two points mean:

- Static fields are often responsible for problems with memory allocation, when a static field refers to an object tree with a lot of data and the reference makes all these objects ineligible for garbage collection. If you have to make such a reference, weak references may help you in some cases.

- If the field is used in more than a single thread, its value may change at any point (unless you introduce some type of locking). If you set a value to a static field a second ago, you still can’t be sure that some other thread didn’t already change it to something else. This may lead to bugs that are very difficult to reproduce. Multi-threading issues aren’t limited to static fields, but they’re common with them, because the same value is automatically shared by all threads.
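The following sketch shows what sharing means in practice. ImportSession is a hypothetical class; the comments point out where the threading problem described above would bite.

```xpp
class ImportSession
{
    // One value shared by every instance (and every thread) in the process
    private static int createdCount;

    public void new()
    {
        // Not thread-safe: two threads can read the same old value
        // and both write back old + 1, so one increment gets lost.
        createdCount++;
    }

    public static int getCreatedCount()
    {
        return createdCount;
    }
}
```

Every new ImportSession() increases the same counter, no matter who created the instance. Also notice that the value lives as long as the process does, which is exactly why a static reference to a large object tree keeps all those objects in memory.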

A finally block can be used after try in a similar way to catch. Code inside finally executes regardless of whether the code in try finished normally or threw an exception. It’s usually used to clean up resources allocated in the try block.

Note that it’s completely valid to use try/finally without any catch clause (because you have exception handling at some higher level or you simply can’t handle it in any way).

try

{

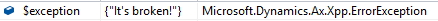

throw error("It's broken!");

}

finally

{

info("Let's clean up everything");

}This statement is in fact a shortcut for try/finally statement used for classes implementing IDisposable interface. It’s intended for releasing certain resources that CLR can’t manage automatically and that should be released as soon as possible (such as file handles). You want to be sure that regardless of happens (e.g. exception is thrown), you won’t leave a resource locked, memory allocated and so on. If the resource is wrapped in a class correctly implementing IDisposable and you instantiate the class with using, you’re safe.

You will meet the dispose pattern very often if you start using .NET types for streams, database queries and network communication, among others. Make sure that you correctly dispose all disposable objects, otherwise you can run out of database connections, for example. The using statement is here to make it easier for you.
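As an illustration, this is roughly how reading a file through a .NET stream might look. TextFileReader and readFirstLine() are hypothetical names, and I’m assuming the code is allowed to access the given path; System.IO.StreamReader is the standard .NET class.

```xpp
class TextFileReader
{
    public static str readFirstLine(str _path)
    {
        str firstLine;

        using (System.IO.StreamReader reader = new System.IO.StreamReader(_path))
        {
            firstLine = reader.ReadLine();
        }   // reader.Dispose() runs here, even if ReadLine() threw an exception

        return firstLine;
    }
}
```

Without using, you would have to write the try/finally yourself and call Dispose() in the finally block, which is easy to forget.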

Maybe you never needed anything like that before, but I think we’ll mix X++ and other .NET code much more often in AX 7, because it’s now so much easier, and therefore more developers will need this kind of knowledge.

Note that you technically can utilize using blocks for other purposes; all you need is to implement IDisposable. The following example shows a custom class TimeMeasure used to measure how long it takes to execute the code inside the using statement.

    using (TimeMeasure m = new TimeMeasure())
    {
        // Do something here
    }

This is the implementation, which coincidentally shows several other new features in AX 7:

    using System.Diagnostics;

    class TimeMeasure implements System.IDisposable
    {
        private Stopwatch stopwatch = Stopwatch::StartNew();

        public void Dispose()
        {
            stopwatch.Stop();
            info(stopwatch.Elapsed.ToString());
        }
    }

The using block starts with creating an instance of the class, which initializes the member variable. And when the block ends, the Dispose method is executed.

Please take it as a demonstration of how the statement works, not as an encouragement to misuse IDisposable on a regular basis. This technique is useful in some cases, but they aren’t very common.

Notice that my code above uses using in another context: using System.Diagnostics. That’s a completely unrelated thing, they merely use the same keyword.

Extension methods provide a way to pretend that you added an instance method to a class, table, view or map without actually changing it.

For example, let’s say that I want a method to convert a list to a set. I can’t change the List class (because it’s defined in AX kernel), but I can easily write a static method doing the conversion for me.

    Set set = List_Extension::toSet(list);

It works, but it’s not easy to read and you have to know that the List_Extension class exists. I would prefer to call the method directly on the list instead:

    Set set = list.toSet();

And this is exactly what extension methods make possible.

To be considered an extension method, the method (and the class in which it’s defined) must meet certain criteria, which you can find on the AX7 wiki. I’d rather show you the actual implementation of toSet():

    public static class List_Extension
    {
        public static Set toSet(List _list)
        {
            //TODO: throw error if _list is null

            Set set = new Set(_list.typeId());
            ListEnumerator enumerator = _list.getEnumerator();

            while (enumerator.moveNext())
            {
                set.add(enumerator.current());
            }

            return set;
        }
    }

Notice that it’s really just a static method that gets a list as its argument. It can’t access any non-public members as it could if it was an actual instance method added to the class.

Even if you have a class that you technically can change (unlike List in the example above), you still may want to use an extension method to avoid overlayering.
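The same approach works for tables. As a sketch, assuming I want a short description of a customer without touching the standard CustTable table, I could write something like this (the method accountAndName() is my own invention; AccountNum and name() are existing members of CustTable):

```xpp
public static class CustTable_Extension
{
    // The first parameter determines which type the method extends
    public static str accountAndName(CustTable _custTable)
    {
        return strFmt("%1 - %2", _custTable.AccountNum, _custTable.name());
    }
}
```

With that in place, code holding a CustTable buffer can call custTable.accountAndName() as if the method were defined on the table itself.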

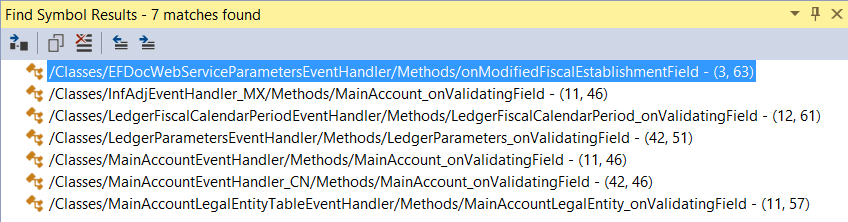

You don’t necessarily have to create any extension method yourself, but you must at least be aware of their existence. Otherwise you would wonder where certain methods come from.

These are really great additions to X++, aren’t they? And don’t forget that this is not an exhaustive list.